Artificial Intelligence (AI) is becoming increasingly ubiquitous in our lives, from voice assistants in our smartphones to self-driving cars on our roads. While the benefits of AI are numerous, its development and deployment raise significant ethical issues. One area of particular concern is the programming of AI “people of color” by White developers. The lack of diversity in AI development teams and in the datasets used to train AI models can perpetuate and amplify existing systemic biases, leading to discrimination against marginalized communities. This piece touches on the ethical considerations of AI “people of color” being programmed by White developers, including the impact on diversity, fairness, accountability, and transparency, as a prompt for further consideration and discussion.

Diversity

One of the most significant ethical considerations of AI “people of color” being programmed by White developers is the lack of diversity in development teams. The tech industry is notorious for its lack of diversity, with only 7% of AI researchers being non-White, according to a 2020 study by the AI Now Institute. This lack of diversity can have serious implications for the development of AI systems that serve all communities equitably.

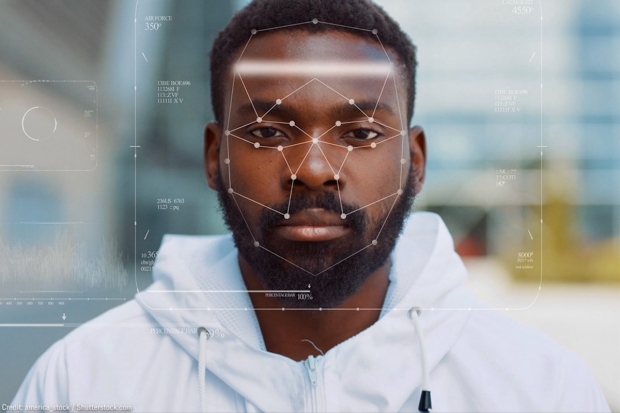

For example, facial recognition algorithms have been shown to be less accurate at identifying people with darker skin tones. The underlying reason is that the datasets used to train these algorithms consist predominantly of images of lighter-skinned individuals. This bias has resulted in the misidentification of people of color by law enforcement agencies and has led to calls for a ban on the use of facial recognition technology by law enforcement. These misidentifications can have serious consequences, including wrongful arrests and even violence against innocent people.
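One way to make an accuracy disparity like this measurable is to score a system separately for each demographic group rather than reporting a single overall number. The following is a minimal sketch, not the method of any particular vendor or study. The function names, the record layout `(group, predicted_id, true_id)`, and the group labels are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return the fraction of correct identifications for each group.

    `records` is an iterable of (group, predicted_id, true_id) tuples.
    This layout is an assumption made for the example.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted_id, true_id in records:
        total[group] += 1
        if predicted_id == true_id:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Illustrative, made-up evaluation records (not real data).
sample_records = [
    ("lighter", "p1", "p1"),
    ("lighter", "p2", "p2"),
    ("lighter", "p3", "p3"),
    ("lighter", "p4", "p4"),
    ("darker", "p5", "p5"),
    ("darker", "p6", "p9"),
    ("darker", "p7", "p7"),
    ("darker", "p8", "p2"),
]

per_group = accuracy_by_group(sample_records)
gap = accuracy_gap(per_group)
```

A large gap between groups is a signal that the training data or the model needs attention, even when the overall accuracy looks acceptable.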

A lack of diversity in the development team can also result in a lack of understanding of the cultural nuances and complexities of the issues faced by people of color. For instance, an AI system developed by a White team may not recognize certain cultural expressions, language use, or dialects. As a result, the system may misinterpret data and introduce bias, adversely affecting people of color.

To address these issues, it’s crucial to ensure that AI development teams are diverse and inclusive. This means hiring developers from diverse backgrounds and including varied perspectives in the development process. Additionally, it’s important to use diverse datasets to train AI models to ensure that they accurately represent and serve the needs of all communities.

Fairness

Fairness is another crucial ethical consideration regarding AI “people of color” being programmed by White developers. Fairness means ensuring that AI systems don’t discriminate against individuals or groups based on characteristics such as race, gender, or ethnicity. The lack of diversity in development teams and in the datasets used to train AI models can lead to biased algorithms that perpetuate and amplify existing systemic biases.

For instance, in 2018, it was revealed that Amazon’s AI recruitment tool was biased against women. The tool was trained on resumes submitted to Amazon over a 10-year period, most of which came from men. As a result, the system learned to discriminate against resumes that included terms such as “women’s” or “female,” leading to the rejection of qualified female candidates. This bias was not intentional, but it highlights the importance of ensuring that AI systems are designed fairly.

One way to ensure fairness is to test AI systems for bias before deployment. This involves analyzing the algorithm’s output to identify any patterns of discrimination, for example by comparing how often each group receives a favorable outcome. If bias is identified, it’s important to address it before the system is deployed to ensure that it doesn’t perpetuate or amplify systemic biases.
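A pre-deployment bias check of this kind can be sketched in a few lines. The example below compares selection rates across groups and computes their ratio, a measure often called the disparate impact ratio. The 0.8 threshold follows the "four-fifths" rule of thumb used in US employment guidance. It is an assumption here, not a standard the article prescribes, and the function names and sample data are hypothetical.

```python
from collections import Counter


def selection_rates(decisions):
    """Return the share of favorable outcomes per group.

    `decisions` is an iterable of (group, was_selected) pairs.
    This layout is an assumption made for the example.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest


def flags_bias(decisions, threshold=0.8):
    """True if the ratio falls below the (assumed) four-fifths threshold."""
    return disparate_impact_ratio(selection_rates(decisions)) < threshold


# Illustrative, made-up screening outcomes (not real data).
sample_decisions = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 2 + [("group_b", False)] * 8
)

rates = selection_rates(sample_decisions)
ratio = disparate_impact_ratio(rates)
needs_review = flags_bias(sample_decisions)
```

A single ratio like this is only a first screen. A system that passes it can still be unfair in other ways, so it complements a closer review of the model and its training data and does not replace one.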

Accountability

Accountability is another crucial ethical consideration regarding AI “people of color” being programmed by White developers. Accountability means ensuring that developers and organizations are held responsible for the actions of their AI systems. This includes identifying who is responsible for designing, developing, and deploying AI systems and ensuring that they are held accountable for any harm caused by the system.

In the case of AI “people of color” being programmed by White developers, accountability is critical. If an AI system developed by a White team misidentifies a person of color or perpetuates systemic biases, who is responsible for the harm caused? The developers who created the system, the organization that deployed it, or both?

To address these issues, it’s important to establish clear lines of responsibility and accountability for AI systems. This means ensuring that developers are aware of the potential implications of their work and are held accountable for any harm caused by their systems. It also means that organizations must take responsibility for the AI systems they deploy and ensure they are transparent about their use and potential biases.

Transparency

Transparency is another crucial ethical consideration regarding AI “people of color” being programmed by White developers. Transparency means ensuring that AI systems are open and understandable to the public. This includes making the system’s design, development, and operation transparent so that users understand how the system works and how it may affect them.

In the case of AI “people of color” being programmed by White developers, transparency is essential. People of color may be more likely to be affected by biased AI systems, and they have a right to understand how these systems operate and how they may be impacted by them.

To address these issues, it’s important to ensure that AI systems are transparent and open to the public. This means providing information about the system’s design, development, and operation so that users understand how it works and how it may affect them. Additionally, it means being transparent about any biases or limitations of the system so that users can make informed decisions about its use.

The programming of AI “people of color” by White developers raises significant ethical considerations, including diversity, fairness, accountability, and transparency. The lack of diversity in development teams and in the datasets used to train AI models can perpetuate and amplify existing systemic biases, leading to discrimination against marginalized communities. To address these issues, it’s crucial to ensure that AI development teams are diverse and inclusive, use diverse datasets to train AI models, test AI systems for bias before they are deployed, establish clear lines of responsibility and accountability for AI systems, and ensure that AI systems are transparent and open to the public. By addressing these issues, we can ensure that AI systems serve all communities equitably and avoid perpetuating or amplifying systemic biases.