Originally posted on 23 March 2023 for the preview, and updated with details of the final release.

Epic Games has released MetaHuman Animator, its much-anticipated facial animation and performance capture toolset for its MetaHuman framework.

The system streamlines the process of transferring an actor's facial performance from footage captured on an iPhone or helmet-mounted camera to a real-time MetaHuman character inside Unreal Engine.

Epic claims that it will “produce the quality of facial animation required by AAA game developers and Hollywood filmmakers, while at the same time being accessible to indie studios and even hobbyists”.

The toolset was announced during Epic Games’ State of Unreal keynote at GDC 2023 earlier this year, and is now available in the latest version of its free MetaHuman plugin for Unreal Engine.

Part of Epic Games’ framework for creating next-gen digital humans for games and animation

MetaHuman Animator is the latest part of Epic Games’ MetaHuman framework for creating next-gen 3D characters for use in games and real-time applications – and also, increasingly, in offline animation.

The first part, cloud-based character-creation tool MetaHuman Creator, which enables users to design realistic digital humans by customising preset 3D characters, was released in early access in 2021.

Users can generate new characters by blending between presets, then adjusting the proportions of the face by hand and customising readymade hairstyles and clothing.

The second part, the MetaHuman plugin for Unreal Engine, was released last year, and makes it possible to create MetaHumans matching 3D scans or facial models created in other DCC apps.

Generates a MetaHuman character matching video footage of an actor

MetaHuman characters have facial rigs, so they already supported facial motion capture, but transferring that motion from video footage of an actor with different facial proportions required manual finessing.

MetaHuman Animator is intended to streamline that retargeting process: a workflow that Epic Games calls Footage to MetaHuman.

As with Mesh to MetaHuman, it generates a MetaHuman matching source data: in this case, video footage of an actor and supporting depth data – of which, more later.

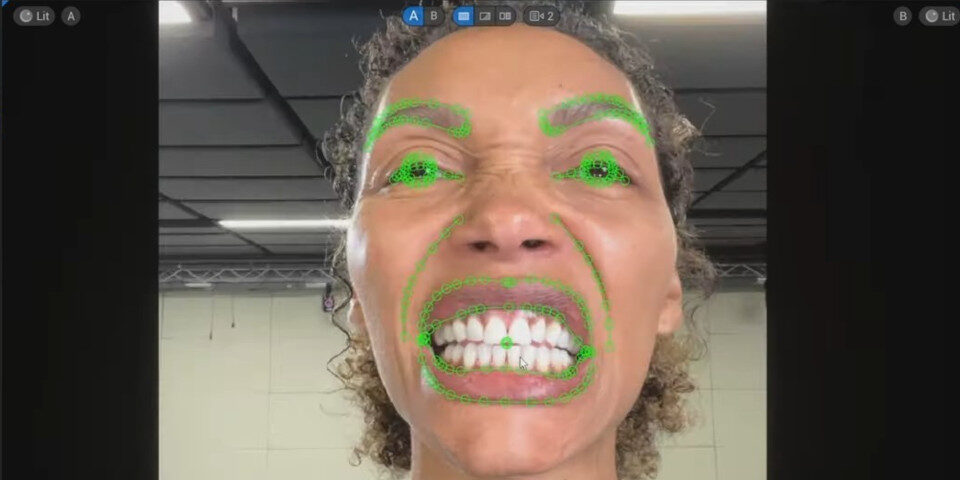

A ‘teeth pose’: one of the standard reference frames the MetaHuman Animator toolset uses to generate a MetaHuman character matching an actor’s facial proportions from video footage of that actor.

Works from one to four reference frames of an actor’s face

The process begins by ingesting the footage into Unreal Engine and identifying key reference frames from which the MetaHuman plugin can perform a solve.

On footage captured with a professional camera, only a single frame is necessary: a frontal view of the actor with a neutral facial expression.

With iPhone footage, Epic recommends also identifying left and right views of the actor’s face to improve the quality of the solve.

An additional reference frame showing the actor’s exposed teeth improves the quality of mouth animations.

The MetaHuman plugin then solves the footage to conform a template mesh – a MetaHuman head – to the data. Users can wipe between the 3D head and the source image to check the solve.

The conformed template mesh is then used to generate an asset that can be used for animation.

Processing is done in the cloud – the only part of the workflow that doesn’t run locally – and the resulting MetaHuman is downloaded to Unreal Engine via Epic’s Quixel Bridge plugin.

Extract facial motion from video and apply it to a MetaHuman

The result is a MetaHuman rig calibrated to the actor’s facial proportions.

The MetaHuman plugin can then extract facial motion from video footage of that actor and transfer it to the 3D character, with the user able to preview the result in the viewport.

The animation can then be exported within Unreal Engine as a Level Sequence or an Animation Sequence.

Exporting as an Animation Sequence makes it possible to transfer the facial animation seamlessly to other MetaHumans, meaning that the actor’s performance can be used to drive any MetaHuman character.

Other benefits of the workflow

The control curves generated by the process are “semantically correct”: that is, structured in the same way as they would be if created by a human animator, which makes the animation easier to edit.

MetaHuman Animator also supports timecode, making it possible to sync the facial animation with full-body motion capture, and it can use the audio from the facial recording to generate tongue animation.
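The article doesn't describe how MetaHuman Animator handles timecode internally, but the general idea behind syncing two recordings by timecode is simple: convert each take's start timecode to an absolute frame count and subtract. The sketch below illustrates that under stated assumptions: both takes use non-drop-frame SMPTE-style `HH:MM:SS:FF` timecode at the same integer frame rate, and the function names are hypothetical.

```python
def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count.

    Assumption: non-drop-frame timecode at an integer frame rate.
    """
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def alignment_offset(face_start: str, body_start: str, fps: int) -> int:
    """Frames to shift the facial take so it lines up with the body take.

    A positive result means the facial recording started later, so its
    first frame corresponds to that many frames into the body take.
    """
    return timecode_to_frames(face_start, fps) - timecode_to_frames(body_start, fps)


# Hypothetical example: the facial take started 2 s 12 frames after the body take.
offset = alignment_offset("01:00:02:12", "01:00:00:00", fps=30)
print(offset)  # 72
```

Drop-frame timecode (used with 29.97 fps video) skips frame labels and would need a different conversion, so real pipelines typically check which convention each device recorded.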

Works with anything from iPhones to pro helmet-mounted cameras

MetaHuman Animator is also designed to work with a full spectrum of facial camera systems.

For indie artists, that includes footage streamed from an iPhone using Epic’s free Live Link Face app.

Live Link Face 1.3, released alongside MetaHuman Animator, updates the app to enable it to capture raw video footage and the accompanying depth data required, the latter via the iPhone’s TrueDepth camera.

Larger studios can use standard helmet-mounted cameras: MetaHuman Animator works with “any professional vertical stereo HMC capture solution”, including those from ILM’s Technoprops division.

Price, release date and system requirements

The MetaHuman Animator toolset is part of Epic Games’ free MetaHuman plugin. The plugin is compatible with Unreal Engine 5.0+, but using MetaHuman Animator requires Unreal Engine 5.2+.

Live Link Face is available free for iOS 16.0 and above. To use it with MetaHuman Animator, you need version 1.3+ of the app and an iPhone 12 or later.

MetaHuman Creator is available in early access. It runs in the cloud, and is compatible with the Chrome, Edge, Firefox and Safari browsers, running on Windows or macOS. It is free for use with Unreal Engine.

Use of the Unreal Engine editor itself is free, as is rendering non-interactive content. For game developers, Epic takes 5% of gross revenue beyond the first $1 million earned over a product’s lifetime.
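As a rough illustration of the royalty arithmetic described above, the sketch below computes a product's lifetime royalty as 5% of gross revenue above the $1 million threshold. It is a simplification, not Epic's actual calculation: reporting periods, currency handling and any revenue categories excluded under the Unreal Engine EULA are ignored, and the function name is hypothetical.

```python
ROYALTY_RATE = 0.05            # 5% of gross revenue...
EXEMPT_THRESHOLD = 1_000_000   # ...beyond the first $1 million of lifetime gross


def lifetime_royalty(gross_revenue: float) -> float:
    """Approximate total royalty owed on a product's lifetime gross revenue.

    Simplified sketch: assumes a single lifetime total in US dollars and
    ignores the finer terms of the actual licence agreement.
    """
    if gross_revenue < 0:
        raise ValueError("gross revenue cannot be negative")
    royalty_bearing = max(0.0, gross_revenue - EXEMPT_THRESHOLD)
    return royalty_bearing * ROYALTY_RATE


for gross in (500_000, 1_000_000, 3_000_000):
    print(f"${gross:,} gross -> ${lifetime_royalty(gross):,.0f} royalty")
```

Under this simplified model, a game grossing $3 million over its lifetime would owe 5% of the $2 million above the threshold, or $100,000.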

Read more about MetaHuman Animator on Epic Games’ blog

Find online documentation for MetaHuman Animator in Epic Games’ new MetaHuman Hub

Download the free MetaHuman plugin for Unreal Engine, including MetaHuman Animator
