Glaze protects artists’ images from ‘unethical AI models’

Wednesday, June 28th, 2023 | Posted by Jim Thacker

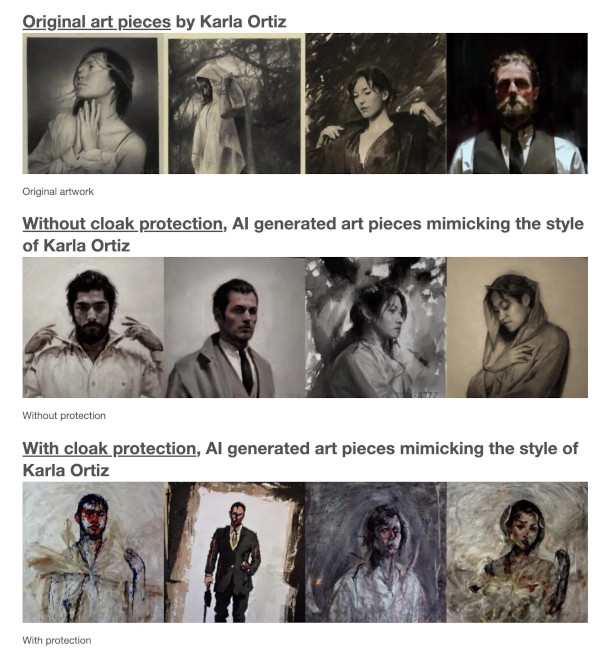

Part of Musa Victoriosa by Karla Ortiz: the “first painting released to the world that uses Glaze, a protective tech against unethical AI/ML models”. The software is now available to download free in beta.

Originally posted on 16 March 2023. Scroll down for details of the Glaze 1.0 release.

Researchers at the University of Chicago have released Glaze, a free tool intended to prevent the style of an artist’s online images from being copied by generative AI art tools.

The machine-learning-based tool applies a ‘style cloak’ to images: barely perceptible visual changes designed to mislead generative models that try to learn the style of a specific artist.

A free tool intended to make it harder for AI models to learn artists’ styles from online images

The AI models behind new generative art tools like Stable Diffusion and Midjourney were trained on images scraped from the internet: something that has led to a class action lawsuit against their creators.

You can find a summary of the legal issues involved in this article in The Verge.

Glaze is intended to make it harder for new AI models to learn artists’ personal styles from images in online galleries, by enabling artists to apply a ‘style cloak’ to the images they upload.

According to Glaze’s developers, AI models trained on cloaked images learn a style different from the artist’s own, making the artist’s real style harder to replicate via text-to-image prompts.

The tool is being developed by researchers at the University of Chicago in collaboration with artists including Karla Ortiz, one of the litigants in the class action suit.

Glaze makes it harder for AI models to learn an artist’s style from online art. Image provided by University of Chicago professor and Glaze team lead Ben Zhao for this article on Glaze in the New York Times.

Trades strength of protection against processing time and visible changes to the image

Glaze itself uses machine learning techniques to generate the style cloak, with the software’s Render Quality parameter determining how much compute time it spends searching for the optimal result.

Users can also set the Intensity of the style cloak, with higher values resulting in stronger protection but usually also more visible changes to the artwork.

According to the developers, art styles with ‘smoother surfaces’, like character design and animated art, are “more vulnerable to protection removal” and require higher Intensity settings.

After an image is modified, Glaze checks the effectiveness of the result, and warns the user if it fails to provide enough protection.

Current limitations

Processing an image with Glaze requires a fairly powerful machine and a fair amount of time: the highest Render Quality setting “takes around 60 minutes per image on a personal laptop”.

That makes processing an entire online portfolio a significant investment of effort, with no absolute guarantee that the protection provided will not be bypassed by new AI models in future.

The development team describes cloaking images as a “necessary first step” until “longer term (legal, regulatory) efforts take hold”.

Glaze’s online FAQs provide more information on whether it will be possible for future AI models to circumvent the protection it provides, and are worth reading if you plan to use the software.

Interestingly, while cloaking images will not affect AI models already trained on an artist’s existing images in online galleries, the FAQs suggest that uploading new cloaked images will reduce the accuracy with which AI art tools can reproduce that artist’s style in future updates to those tools.

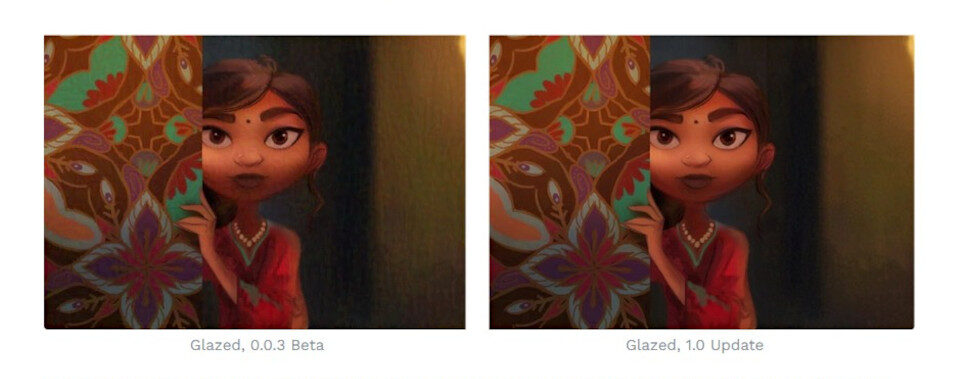

An image by Oscar Araya ‘glazed’ using the beta version of the software (left) and Glaze 1.0 (right), the new stable release, which causes less perceptible change to the image.

Updated 28 June 2023: The Glaze development team has released Glaze 1.0.

As well as officially moving the software out of beta, the update reworks the core algorithm to better emulate human perception, concentrating changes in areas of an image where they will have less visual impact.

The result should be a less noisy-looking cloaked image: you can see a side-by-side comparison of the output of Glaze 1.0 and the original beta above.

In addition, the software should work better on images with large areas of flat color or color gradients, like comics, manga and anime.

Glaze also now offers “limited protection” against img2img tools, which generate an image based on a source image as well as a text prompt.

The developers have updated the online FAQs to discuss “img2img attacks” and attempts by other developers to bypass Glaze’s cloaking effect.

Licensing and system requirements

Glaze is available for Windows and macOS 13.0+. It supports both Intel and Apple Silicon Macs. The developers plan to release a Glaze web service “later this summer”.

The software is free, and is available under a custom EULA.

Read more about Glaze on the product website

Download free AI image-cloaking tool Glaze
